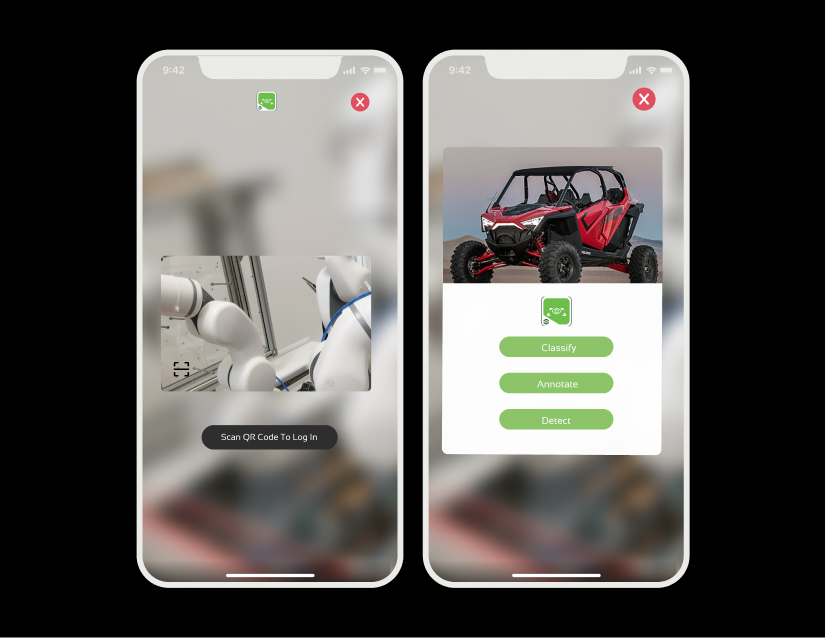

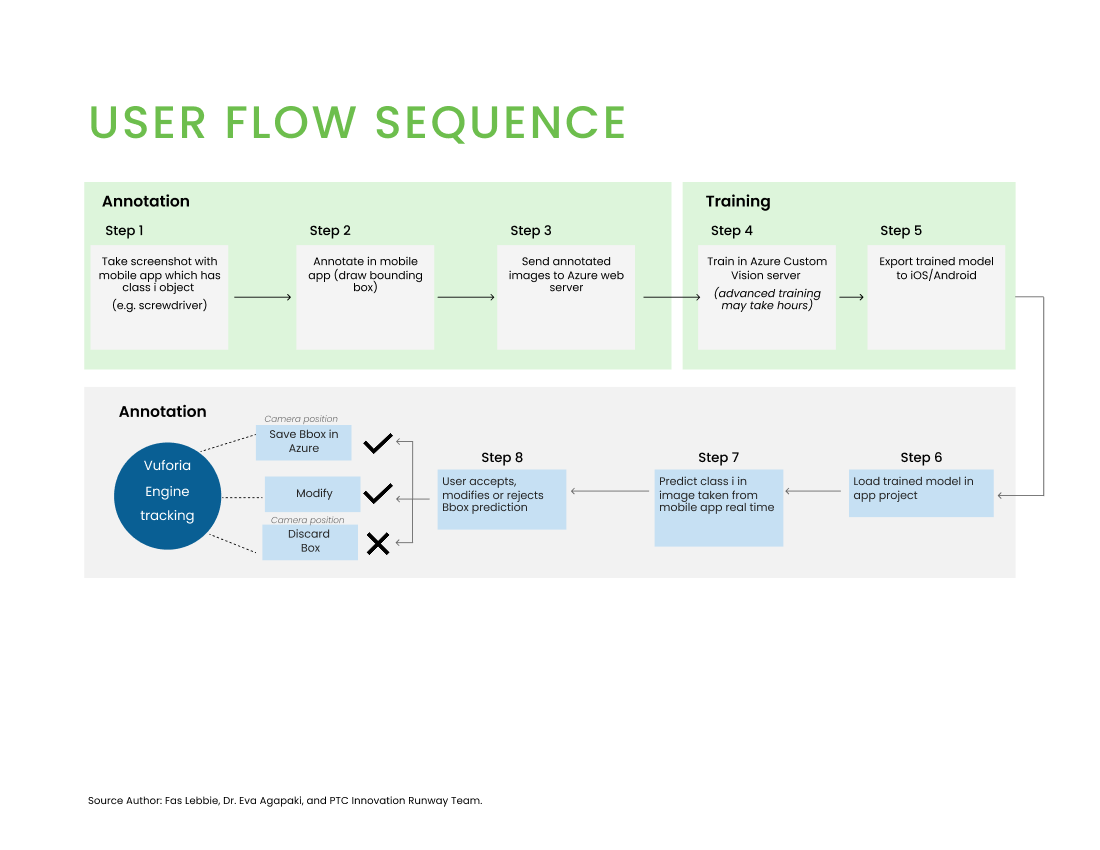

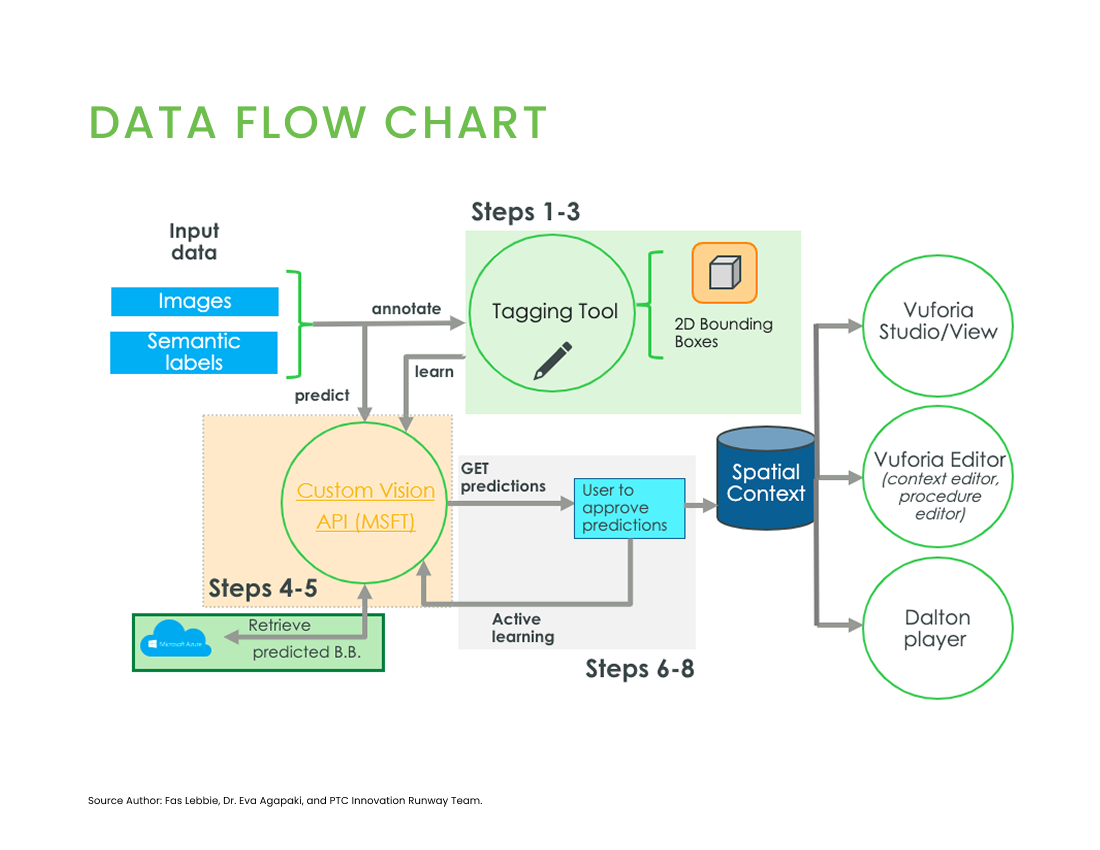

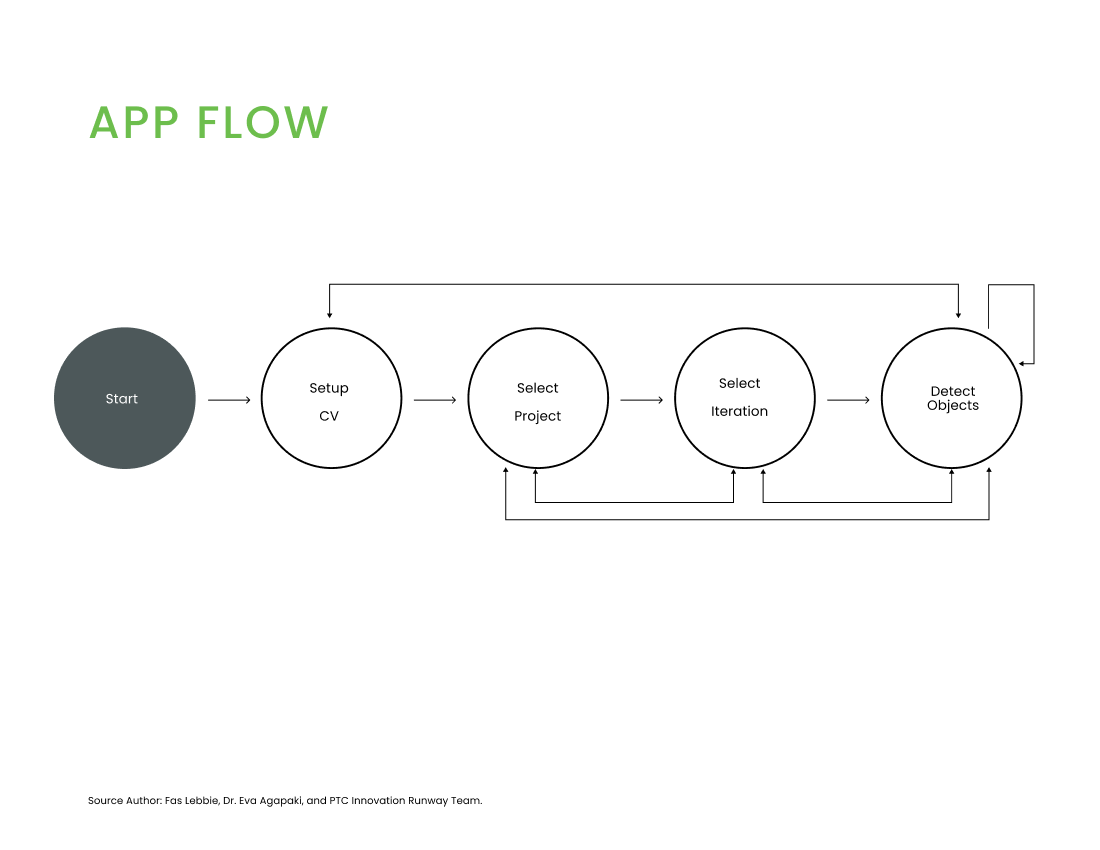

Our prototyping process emphasized developing a seamless user experience across multiple platforms while building a robust AI recognition system. We created a detailed user flow sequence that included both the annotation and training phases:

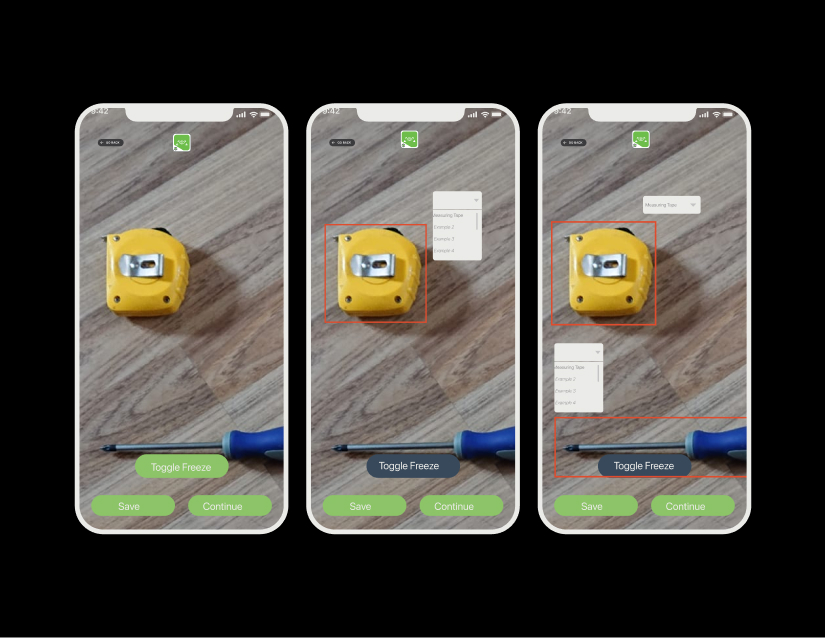

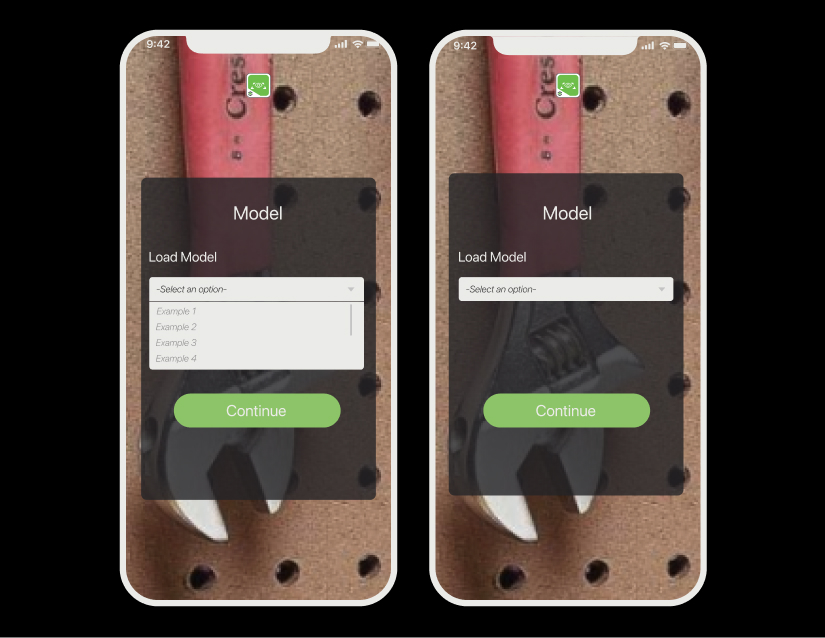

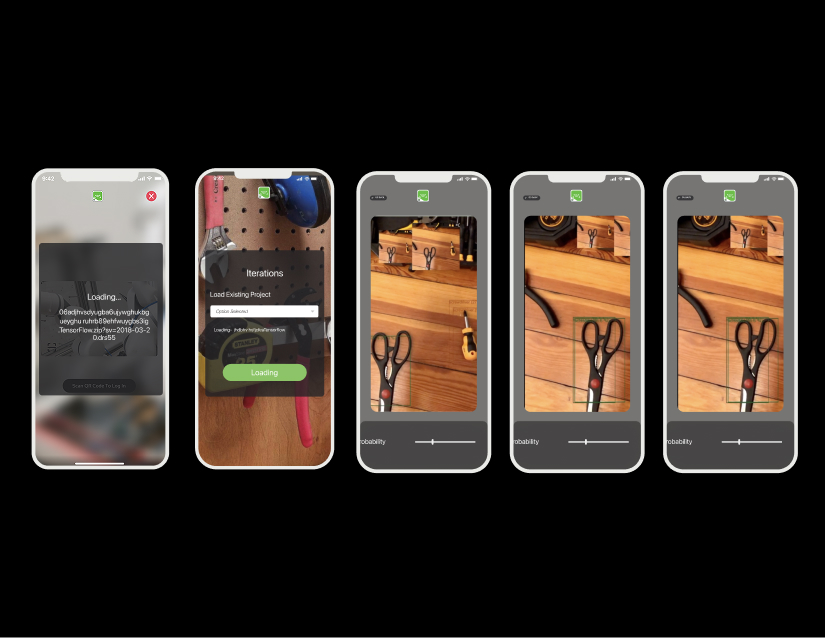

Annotation Flow (Steps 1-3):

- Take a screenshot in the mobile app of a scene containing a class 1 object (e.g., a screwdriver)

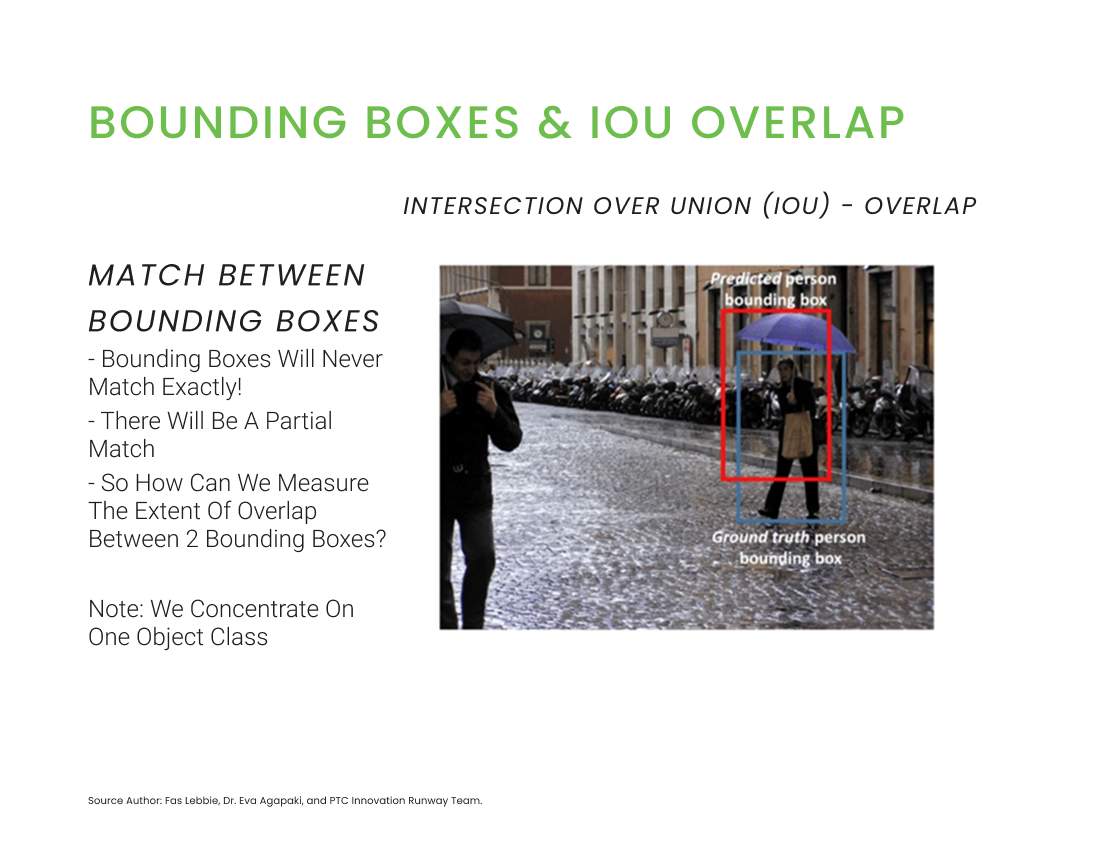

- Annotate the object in the mobile app by drawing a bounding box around it

- Send annotated images to the Azure web server

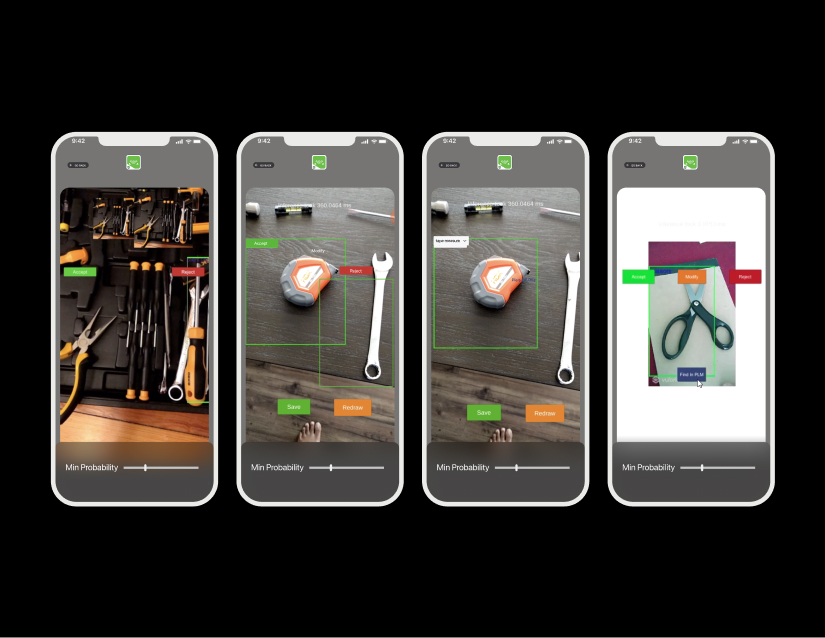

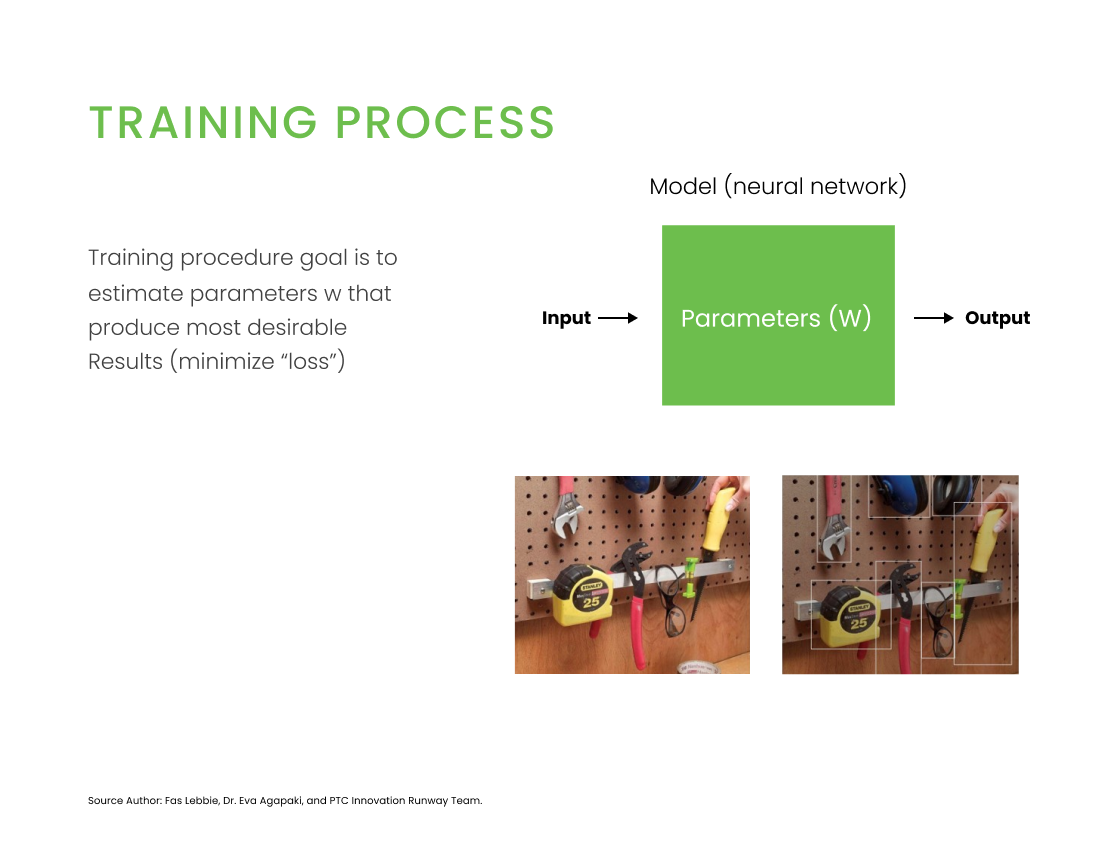

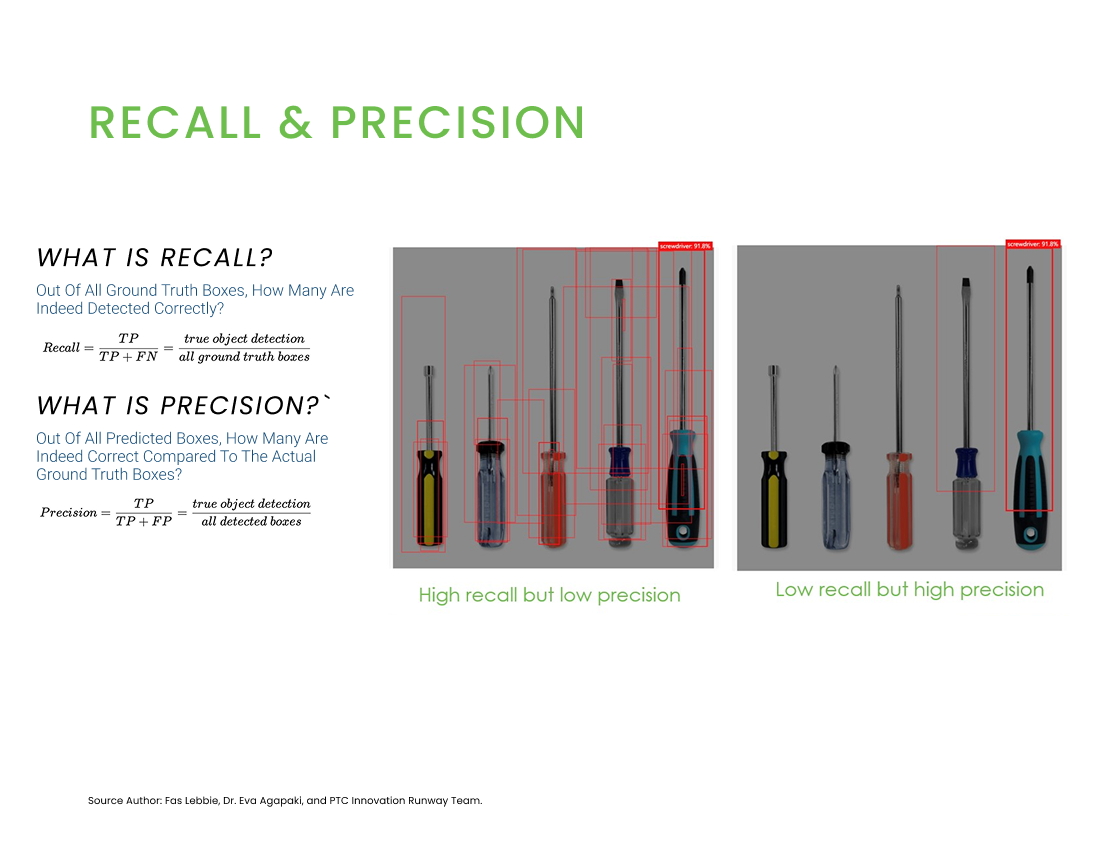
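To make step 3 concrete, here is a minimal Python sketch of how a box drawn on screen might be packaged before upload. It assumes the server expects box coordinates normalized to the image size (left, top, width, height, each between 0 and 1), which is how Azure Custom Vision describes object-detection regions. The `PixelBox` type, the `normalize_box` and `build_annotation` helpers, and the JSON field names are hypothetical and are not taken from the project.

```python
import json
from dataclasses import dataclass


@dataclass
class PixelBox:
    """Hypothetical: the two corners of a box the user dragged, in image pixels."""
    x1: float
    y1: float
    x2: float
    y2: float


def normalize_box(box: PixelBox, image_width: int, image_height: int) -> dict:
    """Convert a drawn box to normalized left/top/width/height in [0, 1].

    Corners are sorted so a box dragged in any direction works, and each
    edge is clamped to the image bounds.
    """
    left, right = sorted((box.x1, box.x2))
    top, bottom = sorted((box.y1, box.y2))
    left = min(max(left, 0.0), image_width)
    right = min(max(right, 0.0), image_width)
    top = min(max(top, 0.0), image_height)
    bottom = min(max(bottom, 0.0), image_height)
    return {
        "left": left / image_width,
        "top": top / image_height,
        "width": (right - left) / image_width,
        "height": (bottom - top) / image_height,
    }


def build_annotation(image_name: str, tag: str, box: PixelBox,
                     image_width: int, image_height: int) -> str:
    """Serialize one annotated image as JSON (hypothetical upload schema)."""
    region = {"tag": tag, **normalize_box(box, image_width, image_height)}
    return json.dumps({"image": image_name, "regions": [region]})
```

For a 400 × 500 image, a box dragged from (300, 250) to (100, 50) normalizes to left 0.25, top 0.1, width 0.5, and height 0.4. Normalizing on the device keeps the upload independent of screen resolution, so the same annotation remains valid if the image is resized on the server.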

Training Flow (Steps 4-8):

- Train the model on the Azure Custom Vision server (advanced training may take hours)

- Export the trained model to iOS/Android

- Load the trained model into the app project

- Predict the object class in images taken with the mobile app, in real time

- The user accepts, modifies, or rejects each bounding box prediction

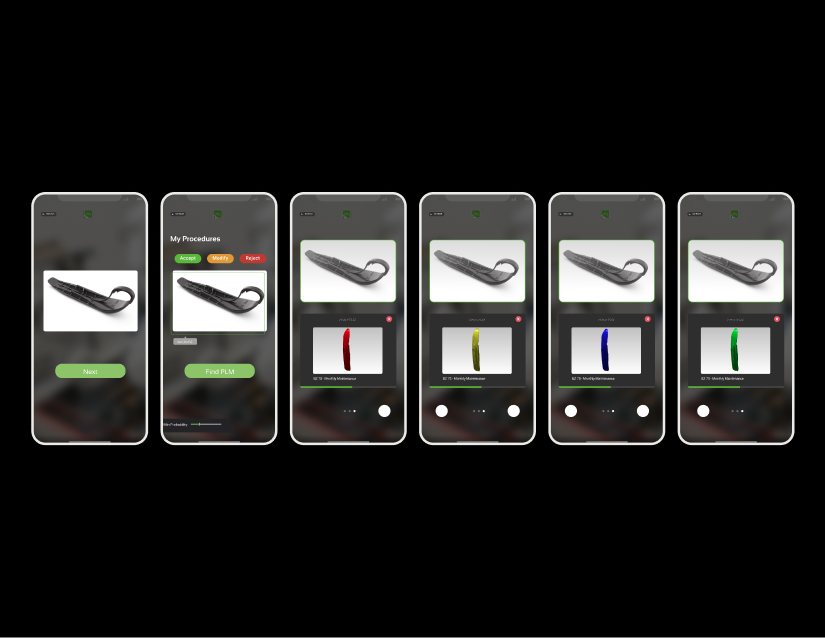

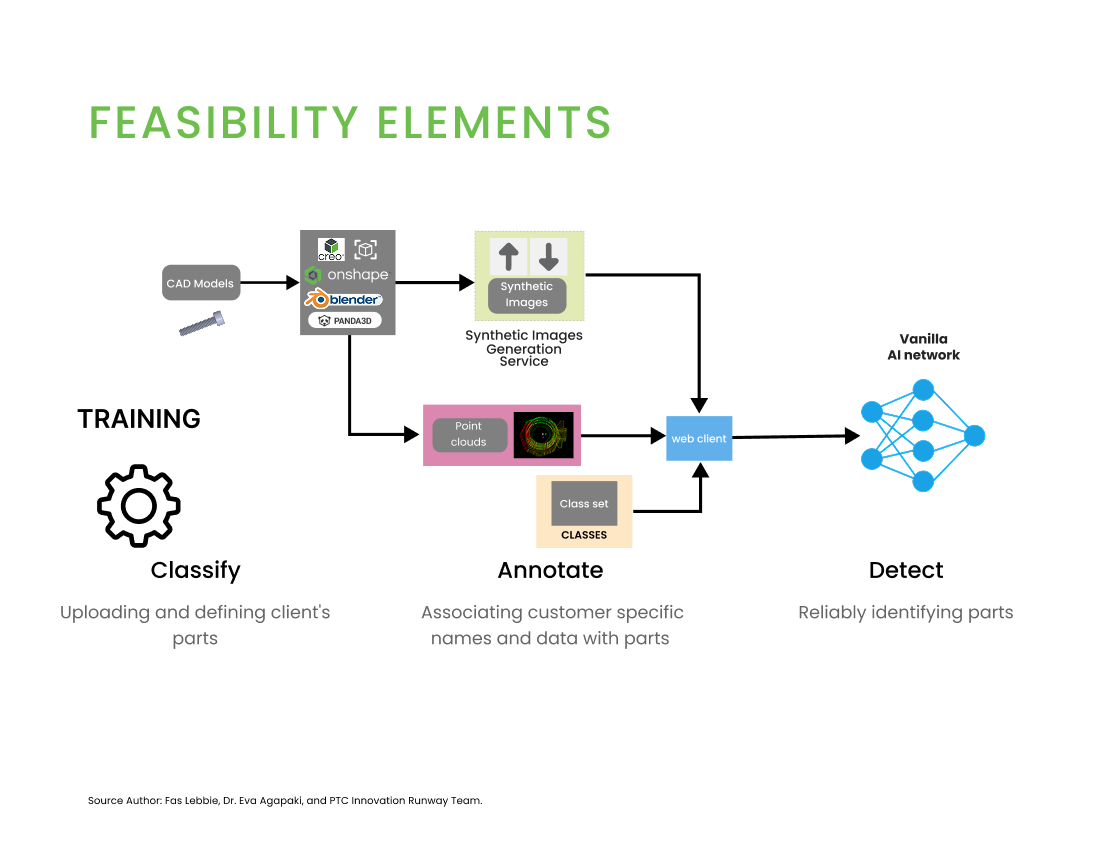

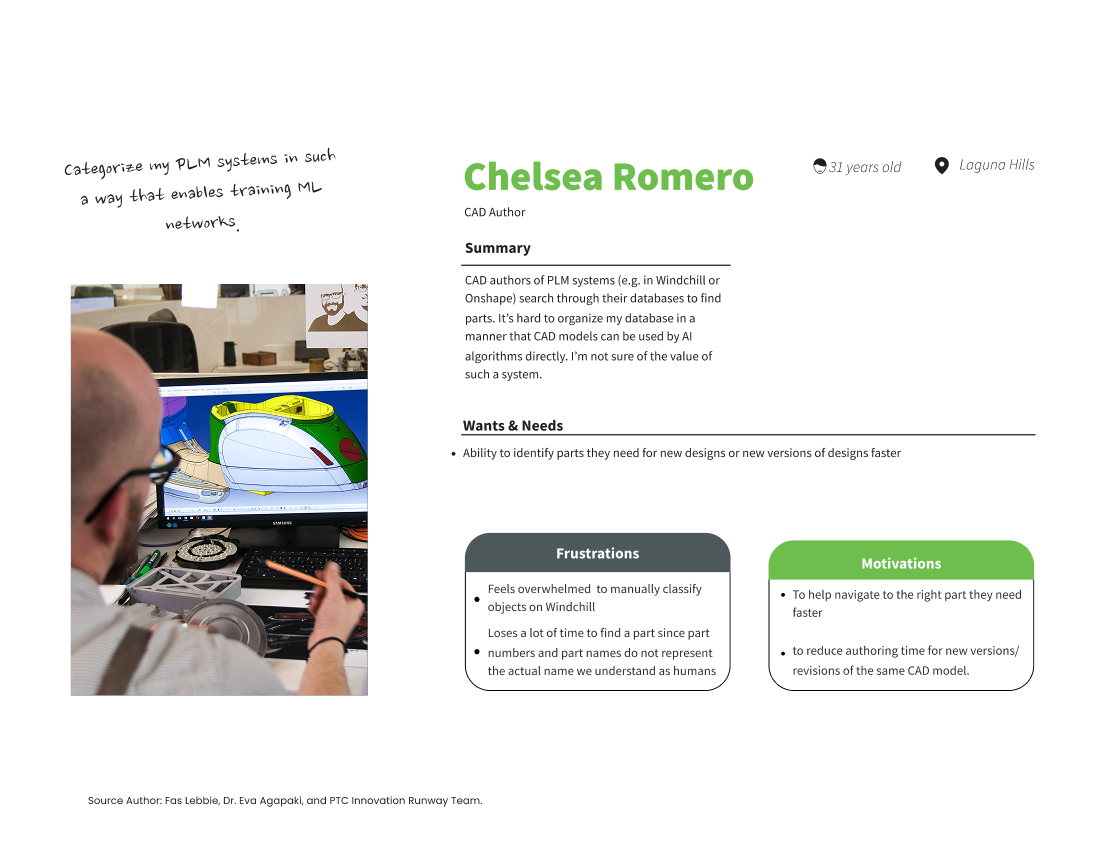

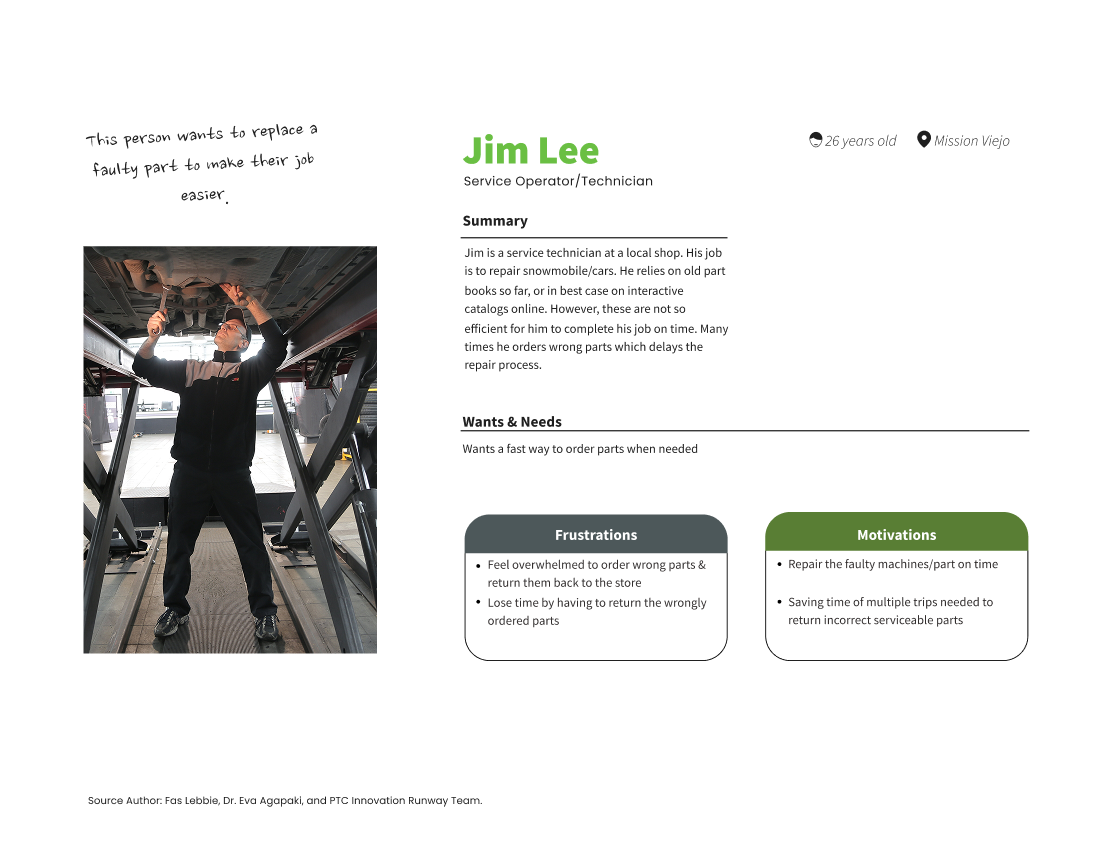

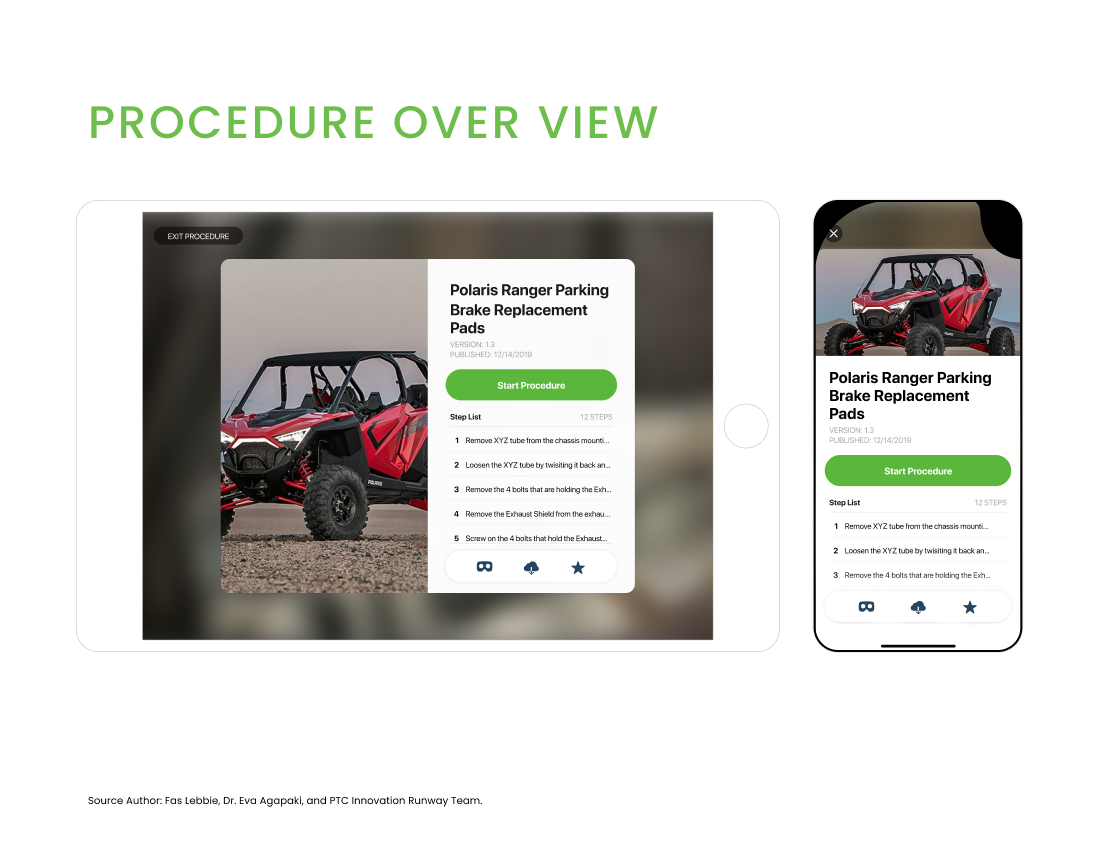
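Step 8 closes the loop: each reviewed prediction can become a new label for the next training round in step 4. The Python sketch below shows one way to model that review under assumptions the source does not state: accepted boxes are kept as-is, modified boxes are replaced by the user's correction, and rejected boxes are simply dropped. The `Decision`, `Box`, `Prediction`, `review`, and `collect_labels` names, and the 0.5 display threshold, are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Optional, Tuple


class Decision(Enum):
    ACCEPT = "accept"
    MODIFY = "modify"
    REJECT = "reject"


@dataclass(frozen=True)
class Box:
    """A tagged bounding box in normalized image coordinates."""
    tag: str
    left: float
    top: float
    width: float
    height: float


@dataclass(frozen=True)
class Prediction:
    box: Box
    probability: float


def review(prediction: Prediction, decision: Decision,
           corrected: Optional[Box] = None) -> Optional[Box]:
    """Map one user decision to a training label, or None if there is none."""
    if decision is Decision.ACCEPT:
        return prediction.box          # keep the predicted box unchanged
    if decision is Decision.MODIFY:
        if corrected is None:
            raise ValueError("MODIFY requires the user's corrected box")
        return corrected               # the user's edit replaces the prediction
    return None                        # REJECT: assumed to produce no label


def collect_labels(
    reviews: Iterable[Tuple[Prediction, Decision, Optional[Box]]],
    min_probability: float = 0.5,
) -> List[Box]:
    """Gather labels for retraining, skipping predictions below a
    hypothetical display threshold (the user never saw them)."""
    labels: List[Box] = []
    for prediction, decision, corrected in reviews:
        if prediction.probability < min_probability:
            continue
        label = review(prediction, decision, corrected)
        if label is not None:
            labels.append(label)
    return labels
```

Treating a modification as a replacement rather than a separate correction record keeps the retraining set in the same shape as the original annotations from steps 1-3; a real system might instead log rejections as negative examples.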

We developed an AI training model for the backend, using tools such as Onshape, Blender, and Parasolid to generate synthetic images and point clouds, which were fed into a vanilla classification network. Instead of relying on traditional annotation methods, we developed the RTO2-3D network, trained on both image and point cloud data.

For the front-end user experience, I designed high-fidelity interfaces for mobile devices, tablets, and RealWear AR headsets, following each platform's best practices:

- Mobile: We focused on 2D interactions that let users act as a “magic hand,” making inputs into the semi-real world.

- Tablet: We added placeable pins on CAD models to help users make annotations, and kept clickable controls within easy reach.

- RealWear AR: We limited background functions to preserve battery life.

Our implementation strategy was structured around clear execution parameters:

Time:

- 6 months for gradual adoption with Vuforia Studio widgets

- 1 year for the RTOD services suite

- Organized into four sprint phases

Budget:

Human Resources:

Working within these parameters, we delivered a product with four defining characteristics:

- Efficient: Real-time predictions that improve continuously

- Versatile: Adaptable to customer environments

- Persistent: Continuous predictions throughout the surrounding 3D space

- Cross-platform: Integration between Unity Engine and Vuforia Studio